
October 16, 2023

HOW TO BUILD AN API IN NODE.JS USING AWS LAMBDA, API GATEWAY, AND SERVERLESS

The serverless stack has many attractions, and more and more companies are using the Serverless framework and a serverless architecture to build and deploy web applications. The benefits are significant: you don’t have to manage servers or worry about uptime, so developers can focus on the core business logic. Applications scale automatically with load, and you can deploy them quickly. Finally, you pay per execution, which reduces costs because you are billed only for the time your server-side code actually runs, metered by the millisecond.

This tutorial shows you how to build a secure REST API for a note-taking React-Redux application, using MongoDB as a database service and following a serverless approach with AWS Lambda, API Gateway, and the Serverless framework. Here at Nordstrom, our primary database platform is not MongoDB. For this quick tutorial, I chose MongoDB because it is popular and inexpensive, and it can easily be deployed on AWS, Azure, or GCP. If I were building this REST API for Nordstrom, I would use DynamoDB and keep all the infrastructure and data storage under a single cloud provider, which in our case is AWS.

AWS Lambda is an event-driven, serverless computing platform that executes a function in response to an event. Conceptually, it behaves like a lightweight container that is spun up on demand to run your code. AWS manages the underlying infrastructure and scales it up or down to match the event rate, and you are charged only for the time your code is executing.
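To make the event-driven model concrete, here is a minimal sketch of a Node.js Lambda handler sitting behind an API Gateway proxy integration. The handler name `hello` and the hand-written sample event are illustrative assumptions, not part of the project built in this tutorial.

```javascript
// A minimal sketch of a Lambda handler for an API Gateway proxy event.
// `hello` and the sample event below are hypothetical, for illustration only.
const hello = async (event) => {
  // API Gateway passes query string values in `queryStringParameters`
  // (null when the request has none).
  const params = event.queryStringParameters || {};
  const name = params.name || 'world';

  // A proxy integration expects a statusCode and a string body.
  return {
    statusCode: 200,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ message: `Hello, ${name}!` }),
  };
};

// Local invocation with a hand-written event.
hello({ queryStringParameters: { name: 'Nordstrom' } }).then((response) => {
  console.log(response.statusCode, response.body);
});
```

The function only runs when an event arrives, which is why billing follows execution time rather than server uptime.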

PREREQUISITES

In Part 1, we are going to build the backend. To follow along, you will need the following:

- AWS account

- Node.js — I’m using v14.16.0

- AWS CLI, with a profile configured with your AWS access key ID and secret access key

- Postman for testing

GETTING STARTED WITH THE SERVERLESS FRAMEWORK

Install the Serverless CLI globally on your machine by running the following command:

npm install serverless -g

Now, create the service:

$ sls create -t aws-nodejs -p serverless-restapi && cd serverless-restapi

This command scaffolds the files you need to define your Lambda functions and API Gateway events inside the project directory.

Inside the serverless-restapi root directory, create a .env file to store environment variables and a .gitignore file, then add .env to .gitignore so that Git ignores the .env file. This way you can store environment variables locally without pushing any secrets to a repository, as long as they all live in .env.

INSTALLING MODULES, INCLUDING DEV DEPENDENCIES

We need a few modules to create, test, and deploy this REST API. First, we need mongoose, an Object Data Modeling (ODM) library for MongoDB and Node.js. It manages relationships between data and provides schema validation, among many other features. We also need dotenv to load environment variables and secrets; validator, a library of string validators and sanitizers that we will use to make sure our schema model holds valid values; and lodash.isempty, which the handlers use to detect empty request bodies. Finally, as a dev dependency, we need the Serverless Offline plugin to run the code locally and test it before we push everything to AWS. To install these dependencies, run the following commands:

$ npm init -y

$ npm i dotenv mongoose validator lodash.isempty --save

$ npm i serverless-offline -D

CREATE A DATABASE USING MONGODB ATLAS

MongoDB Atlas is a cloud database service for modern applications. You can deploy fully managed MongoDB on AWS, Azure, or GCP; I am using AWS with the free-tier M0 sandbox cluster for this project.

Once the cluster is created, you need to do three things before you start using the database.

- Go to the Security tab, select Database Access, and create a database user. Save the credentials for later.

- Under the same Security tab, select Network Access and add the IP addresses that are allowed to connect. Lambda functions do not run from a fixed IP address by default, so for this tutorial you may need to allow access from anywhere (0.0.0.0/0) and rely on the database credentials.

- Finally, go to the main cluster view and click Connect. You will see multiple options for connecting to the cluster: select Connect your application, choose a driver and version, then copy the connection string and save it in the .env file as

DB=mongodb+srv://dbAdmin:<password>@<clustername>.cucs2.mongodb.net/<dbname>

Replace the user name, <password>, <clustername>, and <dbname> with the database user credentials from step 1 and your own cluster and database names.
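One detail that is easy to miss when filling in the connection string: a user name or password containing characters such as `@`, `:`, or `/` has to be percent-encoded, or the URI will not parse. The sketch below assembles the string from its parts; `buildMongoUri`, the host, and the sample credentials are hypothetical values chosen for illustration.

```javascript
// Sketch: assemble the Atlas connection string from its parts.
// `buildMongoUri` and the sample values are hypothetical.
const buildMongoUri = ({ user, password, host, dbName }) => {
  // Percent-encode credentials so characters such as '@', ':' or '/'
  // do not break the URI.
  const credentials = `${encodeURIComponent(user)}:${encodeURIComponent(password)}`;
  return `mongodb+srv://${credentials}@${host}/${dbName}`;
};

const uri = buildMongoUri({
  user: 'dbAdmin',
  password: 'p@ss:word/1',
  host: 'cluster0.example.mongodb.net',
  dbName: 'notes',
});
console.log(uri);
// mongodb+srv://dbAdmin:p%40ss%3Aword%2F1@cluster0.example.mongodb.net/notes
```

You can paste the resulting value into .env as the DB variable.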

Finally, let’s write some code and start by setting up the database connection and creating a Note model.

CREATE A NOTE MODEL

In the project root directory, create a folder named models/. Inside it, create a new file named Note.js and add the code below.

    const mongoose = require('mongoose');
    const validator = require('validator');

    const NoteSchema = new mongoose.Schema(
      {
        title: {
          type: String,
          required: true,
          // Mongoose reads custom validators from the `validate` key.
          // Note: isAlphanumeric rejects spaces and punctuation.
          validate: {
            validator(title) {
              return validator.isAlphanumeric(title);
            },
          },
        },
        description: {
          type: String,
          required: true,
          validate: {
            validator(description) {
              return validator.isAlphanumeric(description);
            },
          },
        },
        reminder: {
          type: Boolean,
          required: false,
          default: false,
        },
        status: {
          type: String,
          enum: ['completed', 'new'],
          default: 'new',
        },
        category: {
          type: String,
          enum: ['work', 'todos', 'technology', 'personal'],
          default: 'todos',
        },
      },
      {
        // adds createdAt and updatedAt fields
        timestamps: true,
      }
    );

    module.exports = mongoose.model('Note', NoteSchema);

Using the Schema class, we define the structure of our Note model and add some validation. We export the model and use it in handler.js.
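The schema rules can also be expressed as a plain function, which is handy for quick unit tests that should not need a database. This is only a sketch: `validateNote` is a hypothetical helper, it leaves out the alphanumeric check, and Mongoose remains the source of truth for validation in the project.

```javascript
// Dependency-free sketch of the Note rules, handy for quick unit tests.
// `validateNote` is a hypothetical helper; Mongoose remains the source of truth.
const STATUSES = ['completed', 'new'];
const CATEGORIES = ['work', 'todos', 'technology', 'personal'];

const validateNote = (note) => {
  const errors = [];
  // title and description are required, non-empty strings
  for (const field of ['title', 'description']) {
    if (typeof note[field] !== 'string' || note[field].length === 0) {
      errors.push(`${field} is required`);
    }
  }
  // optional fields must match the schema when present
  if (note.reminder !== undefined && typeof note.reminder !== 'boolean') {
    errors.push('reminder must be a boolean');
  }
  if (note.status !== undefined && !STATUSES.includes(note.status)) {
    errors.push(`status must be one of: ${STATUSES.join(', ')}`);
  }
  if (note.category !== undefined && !CATEGORIES.includes(note.category)) {
    errors.push(`category must be one of: ${CATEGORIES.join(', ')}`);
  }
  return errors;
};

console.log(validateNote({ title: 'Groceries', description: 'Milk' })); // []
console.log(validateNote({ title: 'Groceries', status: 'done' }));
// [ 'description is required', 'status must be one of: completed, new' ]
```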

CREATE DATABASE CONNECTION

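In the project root directory, create db.js and add the code below. This code handles the database connection, and each Lambda function uses it. The connection options shown are Mongoose 5 options; Mongoose 6 and later no longer accept them, so remove them if you install a newer version.

    const mongoose = require('mongoose');

    mongoose.Promise = global.Promise;

    const connectToDatabase = async () => {
      // Reuse the open connection when Lambda reuses a warm container
      // (readyState 1 means "connected").
      if (mongoose.connection.readyState === 1) {
        return;
      }
      try {
        const databaseConnection = await mongoose.connect(process.env.DB, {
          useUnifiedTopology: true,
          useNewUrlParser: true,
          useCreateIndex: true,
        });
        console.log(`Database connected ::: ${databaseConnection.connection.host}`);
      } catch (error) {
        console.error(`Error::: ${error.message}`);
        // Rethrow instead of exiting the process, so the calling handler
        // can return an error response.
        throw error;
      }
    };

    module.exports = connectToDatabase;

CREATE THE SERVERLESS CONFIGURATION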

Now that the database is set up and the project is properly scaffolded, let’s define the resources in the serverless.yml file and wire up the Create, Read, Update, and Delete (CRUD) functionality.

    service: serverless-restapi

    frameworkVersion: '2'

    provider:
      name: aws
      runtime: nodejs14.x
      stage: ${opt:stage}
      region: us-east-1
      lambdaHashingVersion: 20201221

    # add 5 functions for CRUD operations
    functions:
      create:
        handler: handler.create
        events:
          - http:
              path: notes
              method: post
              cors: true
      getOne:
        handler: handler.getOne
        events:
          - http:
              path: notes/{id}
              method: get
              cors: true
      getAll:
        handler: handler.getAll
        events:
          - http:
              path: notes
              method: get
              cors: true
      update:
        handler: handler.update
        events:
          - http:
              path: notes/{id}
              method: put
              cors: true
      delete:
        handler: handler.delete
        events:
          - http:
              path: notes/{id}
              method: delete
              cors: true

    plugins:
      - serverless-offline # adding the plugin to be able to run the offline emulation

In this configuration, we added five functions: create, getOne, getAll, update, and delete. They all point to the handler.js file, and that is all we need to set up the API Gateway resources that trigger the Lambda functions. At the end, we add the serverless-offline plugin so we can test locally. We will come back to this file later to add the API key and usage plan.
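To see how the http events relate requests to handlers, the sketch below mimics the routing in plain JavaScript, including how a `{id}` segment ends up in `pathParameters`. API Gateway does this work for us; `matchRoute` is a hypothetical helper written only to illustrate the mapping.

```javascript
// Sketch of how the http events above map requests to handlers.
// `matchRoute` is a hypothetical helper; API Gateway does this for us.
const routes = [
  { method: 'POST', path: 'notes', handler: 'handler.create' },
  { method: 'GET', path: 'notes/{id}', handler: 'handler.getOne' },
  { method: 'GET', path: 'notes', handler: 'handler.getAll' },
  { method: 'PUT', path: 'notes/{id}', handler: 'handler.update' },
  { method: 'DELETE', path: 'notes/{id}', handler: 'handler.delete' },
];

const matchRoute = (method, requestPath) => {
  const requestParts = requestPath.split('/').filter(Boolean);
  for (const route of routes) {
    const routeParts = route.path.split('/');
    if (route.method !== method || routeParts.length !== requestParts.length) {
      continue;
    }
    const pathParameters = {};
    const matches = routeParts.every((part, i) => {
      // A segment such as {id} captures whatever the request has there.
      const param = part.match(/^\{(.+)\}$/);
      if (param) {
        pathParameters[param[1]] = requestParts[i];
        return true;
      }
      return part === requestParts[i];
    });
    if (matches) return { handler: route.handler, pathParameters };
  }
  return null; // no route: API Gateway would reject the request
};

console.log(matchRoute('GET', '/notes/abc123'));
// { handler: 'handler.getOne', pathParameters: { id: 'abc123' } }
console.log(matchRoute('GET', '/notes'));
// { handler: 'handler.getAll', pathParameters: {} }
```

This is why the handlers for getOne, update, and delete can read the note ID from `event.pathParameters.id`.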

Next, let’s create handler.js in the root directory and add the code below.

    require('dotenv').config({ path: './.env' });

    const isEmpty = require('lodash.isempty');
    const validator = require('validator');
    const connectToDatabase = require('./db');
    const Note = require('./models/Note');

    /**
     * Helper function that builds an error response
     * @param {*} statusCode HTTP status code
     * @param {*} message error message
     * @returns API Gateway proxy response
     */
    const createErrorResponse = (statusCode, message) => ({
      statusCode: statusCode || 500,
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({
        error: message || 'An Error occurred.',
      }),
    });

    /**
     * Logs the error and sends an error response
     * @param {*} error the error that was thrown
     * @param {*} callback the Lambda callback of the calling handler
     */
    const returnError = (error, callback) => {
      console.log(error);
      if (error.name === 'CastError') {
        // Mongoose could not cast a value, e.g. a malformed note ID
        const message = `Invalid ${error.path}: ${error.value}`;
        callback(null, createErrorResponse(400, `Error:: ${message}`));
      } else {
        callback(
          null,
          createErrorResponse(error.statusCode || 500, `Error:: ${error.message}`)
        );
      }
    };

    /**
     * Notes CRUD functions parameters
     * @param {*} event data that's passed to the function upon execution
     * - for get note by ID and delete will get the Note ID from the event
     * @param {*} context sets callbackWaitsForEmptyEventLoop false
     * @param {*} callback sends a response success or failure
     * @returns
     */
    module.exports.create = async (event, context, callback) => {
      context.callbackWaitsForEmptyEventLoop = false;
      if (isEmpty(event.body)) {
        return callback(null, createErrorResponse(400, 'Missing details'));
      }
      const { title, description, reminder, status, category } = JSON.parse(
        event.body
      );

      const noteObj = new Note({
        title,
        description,
        reminder,
        status,
        category,
      });

      // validateSync returns an error object when validation fails
      if (noteObj.validateSync()) {
        return callback(null, createErrorResponse(400, 'Incorrect note details'));
      }

      try {
        await connectToDatabase();
        console.log(noteObj);
        const note = await Note.create(noteObj);
        callback(null, {
          statusCode: 200,
          body: JSON.stringify(note),
        });
      } catch (error) {
        returnError(error, callback);
      }
    };

    module.exports.getOne = async (event, context, callback) => {
      context.callbackWaitsForEmptyEventLoop = false;
      const id = event.pathParameters.id;
      if (!validator.isAlphanumeric(id)) {
        callback(null, createErrorResponse(400, 'Incorrect Id.'));
        return;
      }

      try {
        await connectToDatabase();
        const note = await Note.findById(id);

        if (!note) {
          // return here so the callback is not invoked twice
          return callback(
            null,
            createErrorResponse(404, `No Note found with id: ${id}`)
          );
        }

        callback(null, {
          statusCode: 200,
          body: JSON.stringify(note),
        });
      } catch (error) {
        returnError(error, callback);
      }
    };

    module.exports.getAll = async (event, context, callback) => {
      context.callbackWaitsForEmptyEventLoop = false;
      try {
        await connectToDatabase();
        const notes = await Note.find();
        if (!notes) {
          return callback(null, createErrorResponse(404, 'No Notes Found.'));
        }

        callback(null, {
          statusCode: 200,
          body: JSON.stringify(notes),
        });
      } catch (error) {
        returnError(error, callback);
      }
    };

    /**
     *
     * @param {*} event
     * @param {*} context
     * @param {*} callback
     * @returns
     */
    module.exports.update = async (event, context, callback) => {
      context.callbackWaitsForEmptyEventLoop = false;
      const data = JSON.parse(event.body);
      const id = event.pathParameters.id;

      if (!validator.isAlphanumeric(id)) {
        callback(null, createErrorResponse(400, 'Incorrect Id.'));
        return;
      }

      if (isEmpty(data)) {
        return callback(null, createErrorResponse(400, 'Missing details'));
      }
      const { title, description, reminder, status, category } = data;

      try {
        await connectToDatabase();

        const note = await Note.findById(id);
        if (!note) {
          return callback(
            null,
            createErrorResponse(404, `No Note found with id: ${id}`)
          );
        }

        note.title = title || note.title;
        note.description = description || note.description;
        // ?? keeps an explicit `false`, which || would discard
        note.reminder = reminder ?? note.reminder;
        note.status = status || note.status;
        note.category = category || note.category;

        const newNote = await note.save();

        // 200 rather than 204, because the response carries a body
        callback(null, {
          statusCode: 200,
          body: JSON.stringify(newNote),
        });
      } catch (error) {
        returnError(error, callback);
      }
    };

    /**
     *
     * @param {*} event
     * @param {*} context
     * @param {*} callback
     * @returns
     */
    module.exports.delete = async (event, context, callback) => {
      context.callbackWaitsForEmptyEventLoop = false;
      const id = event.pathParameters.id;
      if (!validator.isAlphanumeric(id)) {
        callback(null, createErrorResponse(400, 'Incorrect Id.'));
        return;
      }
      try {
        await connectToDatabase();
        const note = await Note.findByIdAndRemove(id);
        if (!note) {
          return callback(
            null,
            createErrorResponse(404, `No Note found with id: ${id}`)
          );
        }
        callback(null, {
          statusCode: 200,
          body: JSON.stringify({
            message: `Removed note with id: ${note._id}`,
            note,
          }),
        });
      } catch (error) {
        returnError(error, callback);
      }
    };

That’s it. We are ready to start testing. Open up your terminal and run the following command:

$ sls offline start --skipCacheInvalidation -s dev

Using the Serverless Offline plugin, we can emulate AWS Lambda and API Gateway on our local machine to speed up our development cycles. It saves time because we can quickly see logs and debug locally before we push the code to AWS.

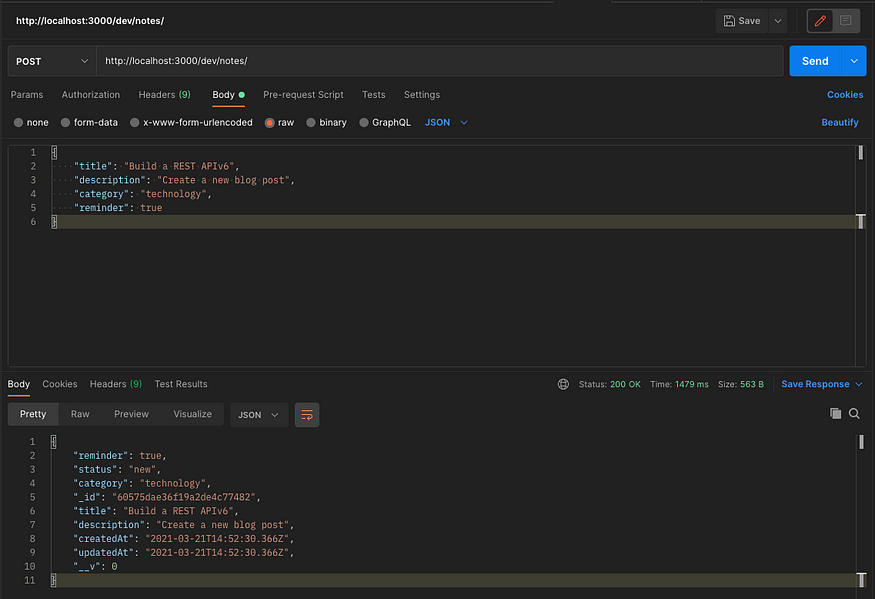

Then open up Postman, and let’s start testing. First, let’s create a note by sending a POST request to the notes endpoint.

Make sure to test all the endpoints and confirm that everything works as expected. Once the tests are complete, let’s secure the REST API endpoints by requiring users to send an API key with every request.
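For repeatable checks alongside Postman, the callback-style handlers can also be exercised from a script. The sketch below wraps a handler call in a Promise; `invoke` and `echoHandler` are hypothetical stand-ins, and in the project you would pass one of the functions exported from handler.js instead of the stub.

```javascript
// Sketch: wrap a callback-style handler in a Promise so it can be exercised
// from a script. `invoke` and `echoHandler` are hypothetical stand-ins; in the
// project you would pass one of the functions exported from handler.js.
const invoke = (handler, event) =>
  new Promise((resolve, reject) => {
    const context = {}; // the handlers only set a flag on this object
    const callback = (error, response) =>
      error ? reject(error) : resolve(response);
    // Also reject if an async handler throws before calling the callback.
    Promise.resolve(handler(event, context, callback)).catch(reject);
  });

// Stand-in handler with the same signature as the ones in handler.js.
const echoHandler = async (event, context, callback) => {
  context.callbackWaitsForEmptyEventLoop = false;
  if (!event.body) {
    return callback(null, {
      statusCode: 400,
      body: JSON.stringify({ error: 'Missing details' }),
    });
  }
  callback(null, { statusCode: 200, body: event.body });
};

invoke(echoHandler, { body: JSON.stringify({ title: 'Groceries' }) }).then(
  (response) => {
    console.log(response.statusCode, response.body);
  }
);
```

Because the wrapper resolves with the same response object API Gateway would receive, assertions can target `statusCode` and the parsed `body` directly.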

SETTING OUR USAGE PLAN, API KEYS, AND THROTTLING

API rate limiting is an essential component of Internet security, because DoS attacks can bring down a server by flooding it with API requests. To protect our API, we will turn on rate limiting and throttling by adding a few lines to our serverless.yml configuration.

    ....
    provider:
      ....
      apiGateway:
        apiKeys:
          - free:
              - ${opt:stage}-freekey
          - paid:
              - ${opt:stage}-prokey
        usagePlan:
          - free:
              quota:
                limit: 5000
                offset: 2
                period: MONTH
              throttle:
                burstLimit: 200
                rateLimit: 100
          - paid:
              quota:
                limit: 50000
                offset: 1
                period: MONTH
              throttle:
                burstLimit: 2000
                rateLimit: 1000

    # for each function add private: true
    functions:
      ...
          - http:
              ....
              private: true
    ....

API throttling allows us to control how our API is used. We have defined a throttle at the API layer: rateLimit is the steady-state number of requests per second, burstLimit is the maximum size of a short burst, and requests beyond those limits are rejected with a 429 Too Many Requests response.
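API Gateway applies these limits with a token-bucket model, and a small simulation makes the two numbers easier to reason about. The `TokenBucket` class below is a hypothetical, simplified sketch using the free plan's values (rateLimit 100, burstLimit 200); the real enforcement happens inside API Gateway.

```javascript
// Sketch of token-bucket throttling, the model behind rateLimit and burstLimit.
// `TokenBucket` is a hypothetical class; API Gateway enforces this for us.
class TokenBucket {
  constructor({ rateLimit, burstLimit, now = 0 }) {
    this.rateLimit = rateLimit; // tokens added per second
    this.burstLimit = burstLimit; // bucket capacity
    this.tokens = burstLimit; // start with a full bucket
    this.lastRefill = now; // timestamp in seconds
  }

  // Returns true if the request is allowed, false if it should get a 429.
  tryRequest(now) {
    const elapsed = now - this.lastRefill;
    this.tokens = Math.min(
      this.burstLimit,
      this.tokens + elapsed * this.rateLimit
    );
    this.lastRefill = now;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// Free plan values: 100 requests/second, bursts of up to 200.
const bucket = new TokenBucket({ rateLimit: 100, burstLimit: 200 });
let allowed = 0;
for (let i = 0; i < 250; i += 1) {
  if (bucket.tryRequest(0)) allowed += 1; // 250 requests at the same instant
}
console.log(allowed); // 200
console.log(bucket.tryRequest(0.5)); // true: half a second refills 50 tokens
```

A burst of 250 simultaneous requests gets 200 through and 50 rejected, after which capacity comes back at the steady rate.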

Inside the provider: section, we added API keys and usage plans. Then, by declaring private: true in each function’s http event configuration, we tell Serverless that the endpoints are private and require an API key.

Now we are ready to deploy by running the following command:

sls deploy -s dev

With just this command, Serverless will automagically provision resources on AWS: it creates the CloudFormation templates, packages up the Lambda functions and all their dependencies, and pushes the code to S3, from where it is deployed to the Lambdas. If everything went well, your terminal will list the deployed endpoints and the generated API keys.

You now have the API endpoints and an API key that we can use to interact with the endpoints securely, passing the key in the x-api-key request header. Let’s try getting a note using the newly created endpoint.
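As a sketch of what such a request looks like outside Postman, the helper below builds the URL and options for a call to a private endpoint. The base URL and API key are placeholders to be replaced with the values from your own deployment, and `buildRequest` is a hypothetical helper, not part of the project code.

```javascript
// Sketch: build a request for a deployed endpoint that requires an API key.
// The base URL and key below are placeholders; use the values from your own
// deployment. `buildRequest` is a hypothetical helper.
const buildRequest = ({ baseUrl, path, method = 'GET', apiKey, body }) => {
  // Join base URL and path with exactly one slash between them.
  const url = `${baseUrl.replace(/\/+$/, '')}/${path.replace(/^\/+/, '')}`;
  const options = {
    method,
    headers: {
      'Content-Type': 'application/json',
      // API Gateway reads the key from the x-api-key header.
      'x-api-key': apiKey,
    },
  };
  if (body !== undefined) options.body = JSON.stringify(body);
  return { url, options };
};

const request = buildRequest({
  baseUrl: 'https://<api-id>.execute-api.us-east-1.amazonaws.com/dev/',
  path: '/notes',
  apiKey: '<your-api-key>',
});
console.log(request.url);
// With Node 18+ (global fetch): fetch(request.url, request.options)
```

A request sent without a valid key to a private endpoint is rejected by API Gateway before it ever reaches the Lambda function.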

We are done with Part 1. In Part 2, we will use these endpoints in a front-end React-Redux note-taking application. Stay tuned.


- By Admin
